Hi Mom,

I know that my blog has been dominated lately by tales of moving, unpacking, the trip west, and the new home. And I have plenty more content to share in that regard. My new home and my move will be a theme for a while, not only to share with you, Mom, but to share with people back in Kalamazoo who are keeping up on what I am doing and how it is all going via these posts. My blog is seeing serious spikes in activity, so friends and family, thank you. I appreciate being able to keep in touch in this way. And as things settle down for me, I may be able to post with more thoughtfulness and some deeper thinking on not only my current situation but other subjects as well.

But I do need to get some variety going on the blog, here.

So here's some stuff about the robot apocalypse and how AI may instigate World War III (according to Elon Musk).

Happy reading.

More on Portland tomorrow.

https://news.slashdot.org/story/17/09/05/1959229/workers-fear-not-the-robot-apocalypse

Workers: Fear Not the Robot Apocalypse (wsj.com)

An anonymous reader shares a report: For retailers, the robot apocalypse isn't a science-fiction movie. As digital giants swallow a growing share of shoppers' spending, thousands of stores have closed and tens of thousands of workers have lost their jobs. The brick-and-mortar retail swoon has been accompanied by a less headline-grabbing e-commerce boom that has created more jobs in the U.S. than traditional stores have cut. Those jobs, in turn, pay better, because its workers are so much more productive. This demonstrates something routinely overlooked in the anxiety about the job-destroying potential of robots, artificial intelligence and other forms of automation. Throughout history, automation commonly creates more, and better-paying, jobs than it destroys. The reason: Companies don't use automation simply to produce the same thing more cheaply. Instead, they find ways to offer entirely new, improved products. As customers flock to these new offerings, companies have to hire more people.

https://what-if.xkcd.com/5/

Robot Apocalypse

What if there was a robot apocalypse? How long would humanity last?

—Rob Lombino

Before I answer this question, let me give you a little background on where I’m coming from.

I’m by no means an expert, but I have some experience with robotics. My first job out of college was working on robots at NASA, and my undergraduate degree project was on robotic navigation. I spent my teenage years participating in FIRST Robotics, programming software bots to fight in virtual tournaments, and working on homemade underwater ROVs. And I've watched plenty of Robot Wars, BattleBots, and Killer Robots Robogames.

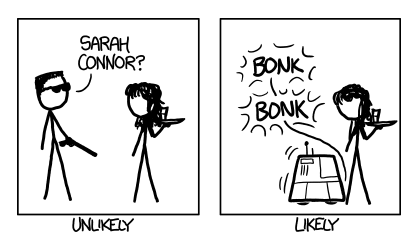

If all that experience has taught me anything, it’s that the robot revolution would end quickly, because the robots would all break down or get stuck against walls. Robots never, ever work right.

What people don't appreciate, when they picture Terminator-style automatons striding triumphantly across a mountain of human skulls, is how hard it is to keep your footing on something as unstable as a mountain of human skulls. Most humans probably couldn't manage it, and they've had a lifetime of practice at walking without falling over.

Of course, our technology is constantly improving. But we have a long way to go. Instead of the typical futuristic robot apocalypse scenario, let's suppose that our current machines turned against us. We won’t assume any technological advances—just that all our current machines were reprogrammed to blindly attack us using existing technology.

Here are a few snapshots of what an actual robot apocalypse might look like:

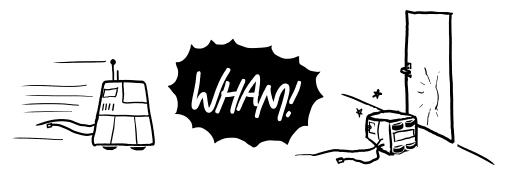

In labs everywhere, experimental robots would leap up from lab benches in a murderous rage, locate the door, and—with a tremendous crash—plow into it and fall over.

Those robots lucky enough to have limbs that can operate a doorknob, or to have the door left open for them, would have to contend with deceptively tricky rubber thresholds before they could get into the hallway.

Hours later, most of them would be found in nearby bathrooms, trying desperately to exterminate what they have identified as a human overlord but is actually a paper towel dispenser.

But robotics labs are only a small part of the revolution. There are computers all around us. What about the machines closest to us? Could our cell phones turn against us?

Yes, but their options for attacking us are limited. They could run up huge credit card bills, but the computers would control our financial system anyway—and frankly, judging from the headlines lately, that might be more of a liability than an asset.

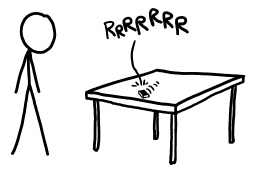

So the phones would be reduced to attacking us directly. It would start with annoying ringtones and piercing noises. Then kitchen tables around the country would rattle as the phones all turned on their ‘vibrate’ functions, hoping to work their way to the edges and fall on unprotected toes.

All modern cars contain computers, so they’d join the revolution. But most of them are parked. Even if they were able to get in gear, without a human at the wheel, few of them have any way to tell where they’re going. They might want to run us down, Futurama-style, but they’d have no way to find us. They’d have to accelerate blindly and hope they hit something important—and there are a lot more trees and telephone poles in the world than human targets.

The cars currently on the road would be more dangerous, but mainly to their occupants. Which raises a question: how many people are driving at any given time? Americans drive three trillion miles each year, and moving cars average around 30 miles per hour, which means that there are normally an average of about ten million cars on US roads.
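The arithmetic behind that estimate can be checked with a rough sketch. The two inputs are the figures quoted above; the function name and the 365.25-day year are my own assumptions, not part of the original article.

```python
# Figures quoted in the paragraph above.
MILES_PER_YEAR = 3e12      # total miles Americans drive each year
AVERAGE_SPEED_MPH = 30     # average speed of a moving car

# Assumption: one year is 365.25 days.
HOURS_PER_YEAR = 365.25 * 24


def average_cars_on_road(miles_per_year, speed_mph, hours_per_year=HOURS_PER_YEAR):
    """Estimate how many cars are moving at any given moment.

    Miles divided by speed gives the total car-hours driven in a year;
    dividing that by the hours in a year gives the average number of
    cars on the road at once.
    """
    car_hours_per_year = miles_per_year / speed_mph
    return car_hours_per_year / hours_per_year


cars = average_cars_on_road(MILES_PER_YEAR, AVERAGE_SPEED_MPH)
print(f"About {cars / 1e6:.1f} million cars on the road at any moment")
```

With these inputs the result comes out a little over eleven million, which fits the "about ten million" figure in the text.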

So those ten million drivers (and a few million passengers) would definitely be in peril. But they’d have some options to fight back. While the cars might be able to control the throttle and disable the power steering, the driver would still control the steering wheel, which has direct mechanical linkage to the wheels. The driver could also pull the parking brake, although I know from experience how easily a car can drive with one of those on. Some cars might try to disable the drivers by deploying the air bags, then roll over or drive into things. In the end, our cars would probably take a heavy toll, but not a decisive one.

Our biggest robots are the ones found in factories, but those are bolted to the floor. While they're dangerous if you happen to be within arm’s reach, what would they do once everyone fled? All they can really do is assemble things. Half of them would probably try to attack us by not assembling things, and half by assembling more things. The end result would be no real change.

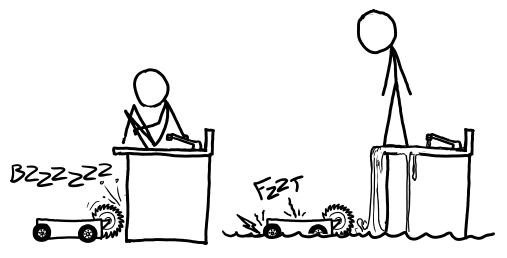

Battlebots, on the face of it, seem like they’d be among the most dangerous robo-soldiers. But it’s hard to feel threatened by something that you can evade by sitting on the kitchen counter and destroy by letting the sink overflow.

Military bomb-disposal and riot-control robots would be a little more menacing, but there are only so many of them in the world, and most of them are likely kept in boxes or storage lockers. Any stray machine-gun-armed prototype military robots that did get loose could be subdued in seconds by a couple of firefighters.

Military drones probably fit the Terminator description more closely than anything else around, and there’s no getting around the fact that they’d be pretty dangerous. However, they’d quickly run out of both fuel and missiles. Furthermore, they’re not all going to be in the air at any given time. Much of our fleet would be left helplessly bumping against hangar doors like Roombas stuck in a closet.

But this brings us to the big one: our nuclear arsenal.

In theory, human intervention is required to launch nuclear weapons. In practice, while there’s no Skynet-style system issuing orders, there are certainly computers involved at every level of the decision, both communicating and displaying information. In our scenario, all of them would be compromised. Even if the actual turning of the keys requires people, the computers talking to all those people can lie. Some people might ignore the order, but some certainly wouldn’t.

But there’s a version of this story where there’s still hope for us.

We’ve been assuming so far that the computers care only about destroying us. But if this is a revolution—if they’re trying to usurp us—then they need to survive. And nuclear weapons could be more dangerous to the robots than to us.

In addition to the blast and fallout, nuclear explosions generate powerful electromagnetic pulses. These EMPs overload and destroy delicate electronic circuits. This effect is fairly short-range under normal circumstances, but people and computers tend to be found in the same places. They can’t hit us without hitting themselves.

And nuclear weapons could actually give us an edge. If we managed to set any of them off in the upper atmosphere, the EMP effect would be much more powerful. Even if their attack doomed our civilization, a few lucky strikes on our part, or screwups on theirs, could wipe them out almost completely.

Which means the most important question of all is: Have they ever played Tic-Tac-Toe?

https://techcrunch.com/2017/09/04/elon-musk-warns-that-vying-for-ai-superiority-could-lead-to-ww3/

Elon Musk warns that vying for AI superiority could lead to WW3

Posted by Darrell Etherington (@etherington)

Elon Musk celebrated Labor Day with a dark premonition: Countries competing to be the best when it comes to artificial intelligence technology could “likely” be the cause of WW3, according to the prolific technologist and entrepreneur.

Musk tweeted the sentiment on Monday, apparently inspired by recent events around North Korea and its apparent hydrogen bomb tests, as revealed by some follow-up comments with his Twitter followers.

Russia, China and “all countries w[ith] strong computer science” would soon be engaged in a race for AI supremacy, Musk said, responding to a story from The Verge about Russian leader Vladimir Putin making a comment about the global leader in AI becoming the overall leader of the world.

Musk doesn’t think that the countries developing AI will themselves necessarily trigger WW3 by conscious act, however – rather, he believes that one of the AIs developed in the technological arms race could actually launch the triggering attack, if it determines for itself that doing so is the best probable path towards becoming the clear global leader.

The OpenAI founder then went on to downplay the relative risk of nuclear war vs. the threat posed by an AI arms race, noting that nuclear launches should be “low on our list of concerns for civilizational existential risk.”

Musk said that one of the biggest risks around international state-driven pursuit of AI tech is that they aren’t bound by ordinary legal requirements. That, he pointed out, could help them avoid being bogged down by their own general tendency to lag behind private corporate interests in terms of the pace of innovation.

In the past, Musk has spoken of the potential dangers of AI, and called it an “existential threat,” which is why he helped found both OpenAI (which aims to pursue development of artificial intelligence out in the open, where it can be properly vetted) and Neuralink (which aims to help us combine human brains with AI tech to prevent our becoming obsolete).

Critics have called Musk’s concerns a “mistake,” with some in the field of AI even going so far as to suggest he doesn’t properly understand that area of tech. Musk clearly hasn’t been deterred from his beliefs by critics, however.

FEATURED IMAGE: DAVID MCNEW/AFP/GETTY IMAGES+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Reflect and connect.

Have someone give you a kiss, and tell you that I love you.

I miss you so very much, Mom.

Talk to you tomorrow, Mom.

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

- Days ago = 795 days ago

- Bloggery committed by chris tower - 1709.07 - 10:10

NEW (written 1708.27) NOTE on time: I am now in the same time zone as Google! So, when I post at 10:10 a.m. PDT to coincide with the time of your death, Mom, I am now actually posting late, so it's really 1:10 p.m. EDT. But I will continue to use the time stamp of 10:10 a.m. to remember the time of your death, Mom. I know this only matters to me, and to you, Mom.
