http://beautifulpeoplemagazine.com/2017/10/23/type-directly-from-brain/

Busy week with a play, a lit mag, work, long days at campus, and so on. So, LOW POWER MODE and straight-up shares for the foreseeable future.

LOW POWER MODE: I sometimes put the blog in what I call LOW POWER MODE. If you see this note, the blog is operating like a sleeping computer, maintaining static memory, but making no new computations. If I am in low power mode, it's because I do not have time to do much that's inventive, original, or even substantive on the blog. This means I am posting straight shares, limited content posts, reprints, often something qualifying for the THAT ONE THING category and other easy to make posts to keep me daily. That's the deal. Thanks for reading.

FROM - http://www.kurzweilai.net/what-if-you-could-type-directly-from-your-brain-at-100-words-per-minute

What if you could type directly from your brain at 100 words per minute?

Former DARPA director reveals Facebook's secret research projects to create a non-invasive brain-computer interface and haptic skin hearing

April 19, 2017

(credit: Facebook)

Regina Dugan, PhD, Facebook VP of Engineering, Building8, revealed today (April 19, 2017) at the Facebook F8 2017 conference a plan to develop a non-invasive brain-computer interface that will let you type at 100 wpm by decoding neural activity devoted to speech.

Dugan previously headed Google’s Advanced Technology and Projects Group, and before that, was Director of the Defense Advanced Research Projects Agency (DARPA).

She explained in a Facebook post that over the next two years, her team will be building systems that demonstrate “a non-invasive system that could one day become a speech prosthetic for people with communication disorders or a new means for input to AR [augmented reality].”

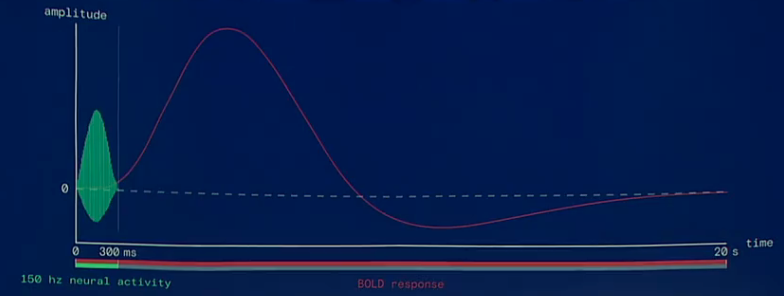

Dugan said that “even something as simple as a ‘yes/no’ brain click … would be transformative.” That simple level has been achieved by using functional near-infrared spectroscopy (fNIRS) to measure changes in blood oxygen levels in the frontal lobes of the brain, as KurzweilAI recently reported. (Near-infrared light can penetrate the skull and partially into the brain.)

Dugan agrees that optical imaging is the best place to start, but her Building8 team plans to go way beyond that research, sampling hundreds of times per second with millimeter precision. The research team began working on the brain-typing project six months ago, and she now has a team of more than 60 researchers who specialize in optical neural imaging systems that push the limits of spatial resolution and in machine-learning methods for decoding speech and language.

The research is headed by Mark Chevillet, previously an adjunct professor of neuroscience at Johns Hopkins University.

Besides replacing smartphones, the system would be a powerful speech prosthetic, she noted — allowing paralyzed patients to “speak” at normal speed.

(credit: Facebook)

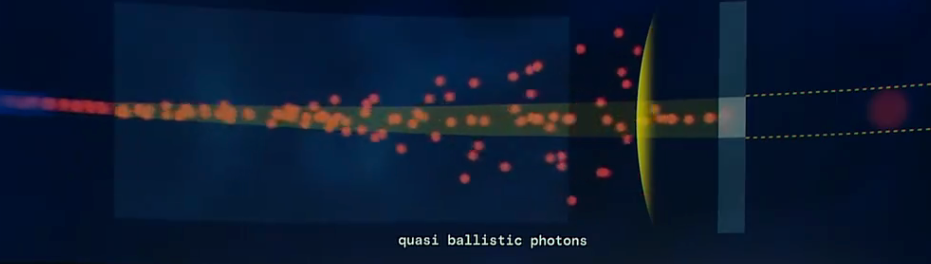

Dugan revealed one specific method the researchers are currently working on to achieve that: a ballistic filter that creates quasi-ballistic photons (avoiding diffusion), producing a narrow beam for precise targeting, combined with a new method of detecting blood-oxygen levels.

Neural activity (in green) and associated blood oxygenation level dependent (BOLD) waveform (credit: Facebook)

Dugan also described a system that may one day allow hearing-impaired people to hear directly via vibrotactile sensors embedded in the skin. “In the 19th century, Braille taught us that we could interpret small bumps on a surface as language,” she said. “Since then, many techniques have emerged that illustrate our brain’s ability to reconstruct language from components.” Today, she demonstrated “an artificial cochlea of sorts and the beginnings of a new ‘haptic vocabulary’.”

A Facebook engineer with acoustic sensors implanted in her arm has learned to feel the acoustic shapes corresponding to words (credit: Facebook)

Dugan’s presentation can be viewed in the F8 2017 Keynote Day 2 video (starting at 1:08:10).

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

- Bloggery committed by chris tower - 2105.11 - 10:10

- Days ago = 2139 days ago

- New note - On 1807.06, I ceased daily transmission of my Hey Mom feature after three years of daily conversations. I plan to continue Hey Mom posts at least twice per week but will continue to post the days since ("Days Ago") count on my blog each day. The blog entry numbering in the title has changed to reflect total Sense of Doubt posts since I began the blog on 0705.04, which include Hey Mom posts, Daily Bowie posts, and Sense of Doubt posts. Hey Mom posts will still be numbered sequentially. New Hey Mom posts will use the same format as all the other Hey Mom posts; all other posts will feature this format seen here.
