A Sense of Doubt blog post #2143 - Section 230 is very good, actually

INAUGURATION COUNTDOWN

21 DAYS to inauguration

Section 230 has been in the news a lot lately because Trump is waging war on it. He doesn't like a social media environment in which so many people malign him on a daily basis and in which even the platforms themselves seem aligned against him, flagging his content as potentially false.

Trump wants Section 230 repealed, and he had the temerity to veto the defense bill because it did not repeal the provision, which has nothing to do with defense. It's beautiful, though, that Congress overturned Trump's veto, the only time this has happened in his "presidency."

Then, he prompted the Senate and the sinister and malevolent Mitch McConnell to address it along with $2000 checks to Americans and his unproven claims of presidential election fraud. McConnell rolled all three into a single bill as a "poison pill" for Democrats and moderate Republicans to swallow if they want Americans to have $2K.

But Section 230 is nothing like Trump claims it to be (big surprise), and it is actually a very good thing for the health of the Internet and especially for start-up tech companies. It needs to stay in place, and if struck down it needs to be returned to law immediately after Biden becomes president AND the Democrats win the Senate (fingers crossed) with the two seats open in Georgia.

So, I decided it was time for a deep dive into this issue -- Section 230 -- for me and maybe for you, too.

The first article is a shorter look at the issue and how Trump's problem is with the FIRST AMENDMENT and FREE SPEECH and not Section 230.

The second is a REALLY deep dive into the provision that is worth understanding if you spend any time at all on the Internet.

I am still in low power mode; however, I had another post planned for today, which I postponed to present this one instead.

Happy Wednesday.

Section 230 Is Good, Actually - EFFector 32.28

There are many, many misconceptions—as well as misinformation from Congress and elsewhere—about Section 230, from who it affects and what it protects to what results a repeal would have. To help explain what’s actually at stake when we talk about Section 230, we’ve put together responses to several common misunderstandings of the law.

“You have to choose: are you a platform or a publisher?” We’ll say it plainly here: there is no legal significance to labeling an online service a “platform” as opposed to a “publisher.” Nor does the law treat online services differently based on their ideological “neutrality” or lack thereof. Section 230 explicitly grants immunity to all intermediaries, both the “neutral” and the proudly biased. It treats them exactly the same, and does so on purpose. That’s a feature of Section 230, not a bug.

Our free speech online is too important to be held as collateral in a routine funding bill. Congress must reject President Trump’s misguided campaign against Section 230.

President Trump’s recent threat to “unequivocally VETO” the National Defense Authorization Act (NDAA) if it doesn’t include a repeal of Section 230 may represent the final attack on online free speech of his presidency, but it’s certainly not the first. The NDAA is one of the “must-pass” bills that Congress passes every year, and it’s absurd that Trump is using it as his at-the-buzzer shot to try to kill the most important law protecting free speech online. Congress must reject Trump’s march against Section 230 once and for all.

Under Section 230, the only party responsible for unlawful speech online is the person who said it, not the website where they posted it, the app they used to share it, or any other third party. It has some limitations—most notably, it does nothing to shield intermediaries from liability under federal criminal law—but at its core, it’s just common-sense policy: if a new Internet startup needed to be prepared to defend against countless lawsuits on account of its users’ speech, startups would never get the investment necessary to grow and compete with large tech companies. 230 isn't just about Internet companies, either. Any intermediary that hosts user-generated material receives this shield, including nonprofit and educational organizations like Wikipedia and the Internet Archive.

Section 230 is not, as Trump and other politicians have suggested, a handout to today’s dominant Internet companies. It protects all of us. If you’ve ever forwarded an email, Section 230 protected you: if a court found that email defamatory, Section 230 would guarantee that you can’t be held liable for it; only the author can.

Two myths about Section 230 have developed in recent years and clouded today’s debates about the law. One says that Section 230 somehow requires online services to be “neutral public forums”: that if they show “bias” in their decisions about what material to show or hide from users, they lose their liability shield under Section 230 (this myth drives today’s deeply misguided “platform vs. publisher” rhetoric). The other myth is that if Section 230 were repealed, online platforms would suddenly turn into “neutral” forums, doing nothing to remove or promote certain users’ speech. Both myths ignore that Section 230 isn’t what protects platforms’ right to reflect any editorial viewpoint in how they moderate users’ speech; the First Amendment to the Constitution is. The First Amendment protects platforms’ right to moderate and curate users’ speech to reflect their views, and Section 230 additionally protects them from certain types of liability for their users’ speech. It’s not one or the other; it’s both.

We’ve written numerous times about proposals in Congress to force platforms to be “neutral” in their moderation decisions. Besides being unworkable, such proposals are clearly unconstitutional: under the First Amendment, the government cannot force sites to display or promote speech they don’t want to display or remove speech they don’t want to remove.

It’s not hard to ascertain the motivations for Trump’s escalating war on Section 230. Even before he was elected, Trump was deeply focused on using the courts to punish companies for insults directed at him. He infamously promised in early 2016 to “open up our libel laws” to make it easier for him to legally bully journalists.

Trump’s attacks on Section 230 follow a familiar pattern: they always seem to follow a perceived slight by social media companies. The White House issued an executive order earlier this year that would draft the FCC to write regulations narrowing Section 230’s liability shield, though the FCC has no statutory authority to interpret Section 230. (Today, Congress is set to confirm Trump’s pick for a new FCC commissioner—one of the legal architects of the executive order.) That executive order came when Twitter and Facebook began to add fact checks to his dubious claims about mail-in voting.

But before now, Trump had never taken the step of claiming that “national security” requires him to be able to use the courts to censor critics. That claim came on Thanksgiving, which also happened to be the day that Twitter users started calling him “#DiaperDon” after he snapped at a reporter. Since then, he has frequently tied Section 230 to national security. The right to criticize people in power is one of the foundational rights on which our country is based. No matter your opinion of Section 230, we should all be alarmed that Trump considers a goofy nickname a security threat. Besides, repealing Section 230 would do nothing about the #DiaperDon tweets or any of the claims of mistreatment of conservatives on social media. Even if platforms have a clear political bias, Congress can't enact a law that overrides those platforms’ right to moderate user speech in accordance with that bias.

What would happen if Section 230 were repealed, as the president claims to want? Online platforms would become more restrictive overnight. Before allowing you to post online, a platform would need to gauge the level of legal risk that you and your speech bring on them—some voices would disappear from the Internet entirely. It’s shocking that politicians pushing for a more exclusionary Internet are doing so under the banner of free speech; it’s even more galling that the president has dubbed it a matter of national security.

Our free speech online is too important to be held as collateral in a routine authorization bill. Congress must reject President Trump’s misguided campaign against Section 230.

Even though it’s only 26 words long, Section 230 doesn’t say what many think it does.

So we’ve decided to take up a few kilobytes of the Internet to explain what, exactly, people are getting wrong about the primary law that defends the Internet.

Section 230 (47 U.S.C. § 230) is one of the most important laws protecting free speech online. While its wording is fairly clear (it states that "No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider"), it is still widely misunderstood. Put simply, the law means that although you are legally responsible for what you say online, if you host or republish other people's speech, only those people are legally responsible for what they say.

But there are many, many misconceptions–as well as misinformation from Congress and elsewhere–about Section 230, from who it affects and what it protects to what results a repeal would have. To help explain what’s actually at stake when we talk about Section 230, we’ve put together responses to several common misunderstandings of the law.

Let’s start with a breakdown of the law, and the protections it creates for you.

How Section 230 protects free speech:

Without Section 230, the Internet would be a very different place, one with fewer spaces where we’re all free to speak out and share our opinions.

One of the Internet’s most important functions is that it allows people everywhere to connect and share ideas, whether that’s on blogs, social media platforms, or educational and cultural platforms like Wikipedia and the Internet Archive. Section 230 says that any site that hosts the content of other “speakers” (from writing, to videos, to pictures, to code that others write or upload) is not liable for that content, with some important exceptions for violations of federal criminal law and intellectual property claims.

Section 230 makes only the speaker themselves liable for their speech, rather than the intermediaries through which that speech reaches its audiences. This makes it possible for sites and services that host user-generated speech and content to exist, and allows users to share their ideas—without having to create their own individual sites or services that would likely have much smaller reach. This gives many more people access to the content that others create than they would ever have otherwise, and it’s why we have flourishing online communities where users can comment and interact with one another without waiting hours, or days, for a moderator, or an algorithm, to review every post.

And Section 230 doesn’t only allow sites that host speech, including controversial views, to exist. It allows them to exist without putting their thumbs on the scale by censoring controversial or potentially problematic content. And because what is considered controversial is often shifting, and context- and viewpoint-dependent, it’s important that these views are able to be shared. “Defund the police” may be considered controversial speech today, but that doesn’t mean it should be censored. “Drain the Swamp,” “Black Lives Matter,” or even “All Lives Matter” may all be controversial views, but censoring them would not be beneficial.

Online platforms’ censorship has been shown to amplify existing imbalances in society—sometimes intentionally and sometimes not. The result has been that more often than not, platforms are more likely to censor disempowered individuals and communities’ voices. Without Section 230, any online service that did continue to exist would more than likely opt for censoring more content—and that would inevitably harm marginalized groups more than others.

No, platforms are not legally liable for other people’s speech–nor would that be good for users.

Basically, Section 230 means that if you break the law online, you should be the only one held responsible, not the website, app, or forum where you said the unlawful thing. Similarly, if you forward an email or even retweet a tweet, you’re protected by Section 230 in the event that that material is found unlawful. Remember—this sharing of content and ideas is one of the major functions of the Internet, from Bulletin Board Services in the 80s, to Internet Relay Chats of the 90s, to the forums of the 2000s, to the social media platforms of today. Section 230 protects all of these different types of intermediary services (and many more). While Section 230 didn’t exist until 1996, it was created, in part, to protect those services that already existed—and the many that have come after.

If you consider that one of the Internet’s primary functions is as a way for people to connect with one another, Section 230 should seem like common sense: you should be held responsible for your speech online, not the platform that hosted your speech or another party. This makes particular sense when you consider the staggering quantity of content that online services host. A newspaper publisher, by comparison, usually has 24 hours to vet the content it publishes in a single issue. Compare this with YouTube, whose users upload at least 400 hours of video every minute, an impossible volume to meaningfully vet in advance of publishing online. Without Section 230, the legal risk associated with operating such a service would deter any entrepreneur from starting one.
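
To put that upload volume in perspective, here is a rough back-of-the-envelope sketch. The 400-hours-per-minute figure comes from the text above; the 8-hour reviewing shift is purely an assumption for illustration, as is the idea that a reviewer watches at normal playback speed.

```python
# Figure from the text: at least 400 hours of video uploaded every minute.
HOURS_UPLOADED_PER_MINUTE = 400
MINUTES_PER_DAY = 24 * 60

# Assumption (not from the text): one reviewer watches 8 hours of video
# per day at normal playback speed.
REVIEW_HOURS_PER_PERSON_PER_DAY = 8


def hours_uploaded_per_day(hours_per_minute: int = HOURS_UPLOADED_PER_MINUTE) -> int:
    """Total hours of video uploaded in one day."""
    return hours_per_minute * MINUTES_PER_DAY


def reviewers_needed(hours_per_day: int,
                     shift_hours: int = REVIEW_HOURS_PER_PERSON_PER_DAY) -> int:
    """Reviewers required to watch everything once (rounded up)."""
    return -(-hours_per_day // shift_hours)  # ceiling division


if __name__ == "__main__":
    daily = hours_uploaded_per_day()
    print(f"Hours uploaded per day: {daily:,}")
    print(f"Reviewers needed at 8 hours each: {reviewers_needed(daily):,}")
```

Under those assumptions, a single day of uploads comes to 576,000 hours of video, which would take about 72,000 full-time reviewers just to watch once, before making any legal judgment about it.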

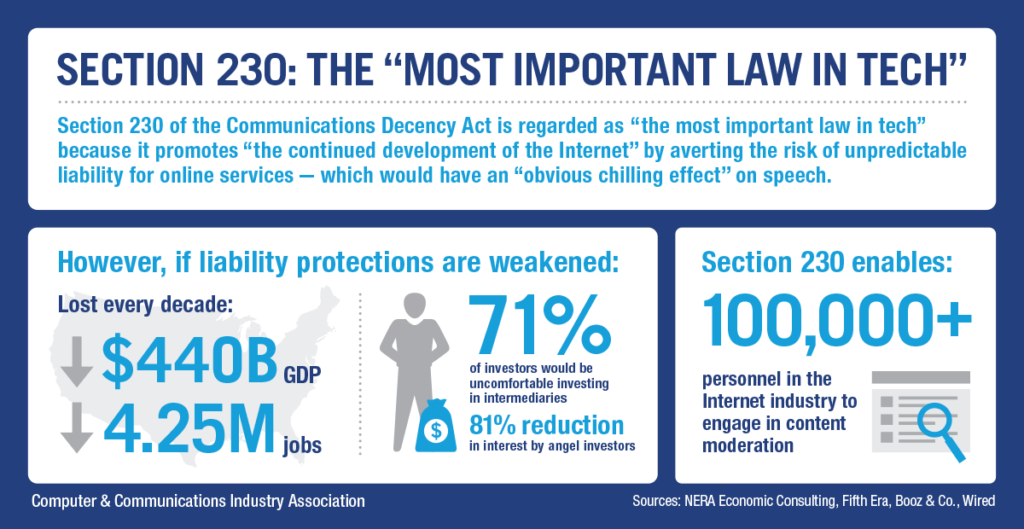

We’ve put together an infographic about how Section 230 works that you can also view to get a quick rundown of how the law protects Internet speech, and a detailed explanation of how Section 230 works for bloggers and comments on blogs, if you’d like to see how this scenario plays out in more detail.

No, Section 230 is not a “hand-out to Big Tech,” or a Big Tech “immunity,” or a "gift" to companies. Section 230 protects you and the forums you care about, not just “Big Tech.”

Section 230 protects Internet intermediaries—individuals, companies, and organizations that provide a platform for others to share speech and content over the Internet. Yes, this includes social networks like Facebook, video platforms like YouTube, news sites, blogs, and other websites that allow comments. It also protects educational and cultural platforms like Wikipedia and the Internet Archive.

But it also protects some sites and activities you might not expect: for example, everyone who sends an email, as well as any cybersecurity firm that uses user-generated content for its threat assessments, patches, and advisories. The organizations that signed onto a letter about the importance of 230 include Automattic (makers of WordPress), Kickstarter, Medium, GitHub, Cloudflare, Meetup, Patreon, and Reddit. But just as important as currently existing services and platforms are those that don’t exist yet, because without Section 230, it would be cost-prohibitive to start a new service that allows user-generated speech.

No, the First Amendment is not at odds with Section 230.

Online platforms are within their First Amendment rights to moderate their online platforms however they like, and they’re additionally shielded by Section 230 from many types of liability for their users’ speech. It’s not one or the other. It’s both.

Some history on Section 230 is instructive here. Section 230 originated as an amendment to the Communications Decency Act (CDA), which was introduced in an attempt to regulate sexual material online. The CDA amended telecommunications law by making it illegal to knowingly send to or show minors obscene or indecent content online. The House passed the Section 230 amendment with a sweeping majority, 420-4.

The online community was outraged by the passage of the CDA. EFF and many other groups pushed back on its overly broad language and launched a Blue Ribbon Campaign, urging sites to "wear" a blue ribbon and link back to EFF's site to raise awareness. Several sites chose to black out their webpages in protest.

The ACLU filed a lawsuit, which several civil liberties organizations like the EFF as well as other industry groups joined, and which reached the Supreme Court. On June 26, 1997, in a 9-0 decision, the Supreme Court applied the First Amendment by striking down the anti-indecency sections of the CDA. Section 230, the amendment that promoted free speech, was not affected by that ruling. As it stands now, Section 230 is pretty much the only part of the CDA left. But it took several different lawsuits to do that.

But Section 230 only shields an intermediary from liability that already exists. If speech is protected by the First Amendment, there can be no liability either for publishing it or republishing it, regardless of Section 230. As the Supreme Court recognized in the Reno v. ACLU case, the First Amendment’s robust speech protections fully apply to online speech. Section 230 was included in the CDA to ensure that online services could decide what types of content they wanted to host. Without Section 230, sites that removed sexual content could be held legally responsible for that action, a result that would have made services leery of moderating their users’ content, even if they wanted to create online spaces free of sexual content. The point of 230 was to encourage active moderation to remove sexual content, allowing services to compete with one another based on the types of user content they wanted to host.

Moreover, the First Amendment also protects the right of online platforms to curate the speech on their sites: to decide what user speech will and will not appear on their sites. So Section 230’s immunity for removing user speech is perfectly consistent with the First Amendment. This is apparent given that prior to the Internet, the First Amendment gave non-digital media, such as newspapers, the right to decide what stories and opinions they would publish.

No, online platforms are not “neutral public forums.”

Nor should they be. Section 230 does not say anything like this. And trying to legislate such a “neutrality” requirement for online platforms—besides being unworkable—would violate the First Amendment. The Supreme Court has confirmed the fundamental right of publishers to have editorial viewpoints.

It’s also foolish to suggest that web platforms should lose their Section 230 protections for failing to align their moderation policies to an imaginary standard of political neutrality. One of the reasons why Congress first passed Section 230 was to enable online platforms to engage in good-faith community moderation without fear of taking on undue liability for their users’ posts. In two important early cases over Internet speech, courts allowed civil defamation claims against Prodigy but not against CompuServe; since Prodigy deleted some messages for “offensiveness” and “bad taste,” a court reasoned, it could be treated as a publisher and held liable for its users’ posts. Former Rep. Chris Cox recalls reading about the Prodigy opinion on an airplane and thinking that it was “surpassingly stupid.” That revelation led to Cox and then-Rep. Ron Wyden introducing the Internet Freedom and Family Empowerment Act, which would later become Section 230.

In practice, creating additional hoops for platforms to jump through in order to maintain their Section 230 protections would almost certainly result in fewer opportunities to share controversial opinions online, not more: under Section 230, platforms devoted to niche interests and minority views can thrive.

Print publishers and online services are very different, and are treated differently under the law–and should be.

It’s true that online services do not have the same liability for their content that print media does. Unlike publications like newspapers that are legally responsible for the content they print, online publications are relieved of this liability by Section 230. The major distinction the law creates is between online and offline publication, a recognition of the inherent differences in scale between the two modes of publication. (Despite claims otherwise, there is no legal significance to labeling an online service a “platform” as opposed to a “publisher.”)

But an additional purpose of Section 230 was to eliminate any distinction between those who actively select, curate, and edit the speech before distributing it and those who are merely passive conduits for it. Before Section 230, courts effectively disincentivized platforms from engaging in any speech moderation. Section 230 provides immunity to any “provider or user of an interactive computer service” when that “provider or user” republishes content created by someone or something else, protecting both decisions to moderate it and those to transmit it without moderation.

“User,” in particular, has been interpreted broadly to apply “simply to anyone using an interactive computer service.” This includes anyone who maintains a website, posts to message boards or newsgroups, or anyone who forwards email. A user can be an individual, a nonprofit organization, a university, a small brick-and-mortar business, or, yes, a “tech company.”

Legacy news media companies—such as a newspaper publisher—may complain that Section 230 gives online social media platforms extra legal protections and thus an unfair advantage. But Section 230 makes no distinction between news entities and social media platforms. And plenty, if not the vast majority, of news media entities publish online—either solely or in tandem with their print editions. When a news media entity publishes online, it gets the exact same Section 230 immunity from liability based on publishing someone else’s content that a social media platform gets.

No, Section 230 does not stop platforms from moderating content.

The misconception that platforms can somehow lose Section 230 protections for moderating users’ posts has gotten a lot of airtime. This is false. Section 230 allows sites to moderate content how they see fit. And that’s what we want: a variety of sites with a plethora of moderation practices keeps the online ecosystem workable for everyone. The Internet is a better place when multiple moderation philosophies can coexist, some more restrictive and some more permissive.

Section 230 reforms (that we've seen) would not make platforms better at moderation.

It’s absolutely a problem that just a few tech companies wield immense control over what speakers and messages are allowed online. It’s a problem that those same companies fail to enforce their own policies consistently or offer users meaningful opportunities to appeal bad moderation decisions.

But without Section 230 there’s little hope of a competitor with fairer speech moderation practices taking hold given the big players’ practice of acquiring would-be competitors before they can ever threaten the status quo.

But there are ways to make content moderation work better for users.

A group of content moderation experts, organizations, advocates, and academic experts agree that the best way to start improving moderation is for companies to implement “The Santa Clara Principles On Transparency and Accountability in Content Moderation.” These principles are a floor, not a ceiling. They state:

- Companies should publish the numbers of posts removed and accounts permanently or temporarily suspended due to violations of their content guidelines.

- Companies should provide notice to each user whose content is taken down or account is suspended about the reason for the removal or suspension.

- Companies should provide a meaningful opportunity for timely appeal of any content removal or account suspension.

Aside from these principles, there are many other ways for users (and Congress) to push for better moderation practices without repealing or modifying Section 230. While large tech companies might clamor for regulations that would hamstring their smaller competitors, they’re notably silent on reforms that would curb the practices that allow them to dominate the Internet today. That’s why EFF recommends that Congress update antitrust law to stop the flood of mergers and acquisitions that have made competition in Big Tech an illusion. Before the government approves a merger, the companies should have to prove that the merger would not increase their monopoly power or unduly harm competition.

But even updating antitrust policy is not enough. Major social media platforms’ business models thrive on practices that keep users in the dark about what information they collect on us and how it’s used. Decisions about what material (including advertising) to deliver to users are informed by a web of inferences about users, inferences that are usually impossible for users even to see, let alone correct.

Because of the link between social media’s speech moderation policies and its irresponsible management of user data, Congress can’t improve Big Tech’s practices without addressing its surveillance-based business models. What’s more, users shouldn’t be held hostage to a platform’s proprietary algorithm. Instead of serving everyone “one algorithm to rule them all” and giving users just a few opportunities to tweak it, platforms should open up their APIs to allow users to create their own filtering rules for their own algorithms. News outlets, educational institutions, community groups, and individuals should all be able to create their own feeds, allowing users to choose who they trust to curate their information and share their preferences with their communities.

In sum: what’s needed to ensure that a variety of views have a place on social media isn’t creating more legal exceptions to Section 230. Rather, companies should institute reasonable, transparent moderation policies. Platforms shouldn’t over-rely on automated filtering and unintentionally silence legitimate speech and communities in the process. And platforms should add features to give users themselves—not platform owners or third parties—more control over what types of posts they see.

No, reforming Section 230 will not hurt Big Tech companies like Facebook and Twitter–but it will hurt smaller platforms and users.

Some people wrongly think that eliminating Section 230 will fix their (often legitimate) concerns about the dominance of online services like Facebook and Twitter. But that won't solve those problems; it will only ensure that major platforms never face significant competition.

Facebook was one of the first tech companies to endorse SESTA/FOSTA, the 2018 law that significantly undermined Section 230’s protections for free speech online, and Facebook is now leading the charge for further reforms to Section 230. Though calls to reform Section 230 are frequently motivated by disappointment in Big Tech’s speech moderation policies, evidence shows that further reforms to Section 230 would make it more difficult for new entrants to compete with Facebook or Twitter—and would likely make censorship worse, not better.

Unfortunately, trying to legislate that platforms moderate certain content more forcefully, or more “neutrally,” would create immense legal risk for any new social media platform—raising, rather than lowering, the barrier to entry for new platforms. Likewise, if Twitter and Facebook faced serious competition, then the decisions they make about how to handle (or not handle) hateful speech or disinformation wouldn’t have nearly the influence they have today on online discourse. If there were twenty major social media platforms, then the decisions that any one of them makes to host, remove, or fact-check the latest misleading post about the election results wouldn’t have the same effect on the public discourse.

Put simply: reforming Section 230 would not only fail to punish “Big Tech,” but would backfire in just about every way, leading to fewer places for people to publish speech online, and to more censorship, not less.

Repealing Section 230 would be disastrous for users.

We don’t have to guess about what would happen if we repeal Section 230. We’ve seen it. SESTA/FOSTA shot a hole right through Section 230, by creating new federal criminal and civil liability for anyone who “owns, manages, or operates an interactive computer service” and speaks, or hosts third-party content, with the intent to “promote or facilitate the prostitution of another person.” Its broad language means that if the owner of an interactive computer service hosts content or viewpoints that might be seen as promoting or facilitating prostitution, or as assisting, supporting or facilitating sex trafficking, the service is liable.

SESTA/FOSTA immediately led to censorship, and to increased risk for sex workers. Organizations that provide support and services to victims of trafficking and child abuse, sex workers, and groups and individuals promoting sexual freedom were implicated by its broad language. Fearing that comments, posts, or ads that are sexual in nature will be ensnared by FOSTA, many vulnerable people have gone offline and back to the streets, where they’ve been sexually abused and physically harmed. Additionally, numerous platforms that host entirely legal speech have had to shut down or self-censor.

It’s essential that newer, smaller companies be given the same chance to host speech that those companies had fifteen or twenty years ago

We’ve filed a lawsuit to repeal SESTA/FOSTA on behalf of several plaintiffs who have been harmed by the law.

On a large scale, the wholesale repeal of Section 230 means many, many newer and smaller platforms would simply have to heavily censor anything that could be construed as illegal speech. Many of these sites would stop hosting content entirely, and smaller sites that host content as their primary function would be forced offline. Those who still want to host content would have to use filters and other algorithmic moderation that would cast a wide net, and remaining posts would likely take days before they could be viewed and allowed online.

Larger sites, though, would continue to function relatively similarly, although with much more censorship and much stricter automated moderation. Section 230 is one of the only legal incentives that sites have now to leave up a large amount of content that they would likely take down, from organizations planning a protest to individuals calling for the ouster of a government official.

If online services were liable for more types of content, the Internet would likely be worse, not better.

We know that platforms are notoriously bad at moderation. Even when detailed guidelines for moderators exist, it’s often very hard to apply strict rules successfully to the vast array of types of speech that exist—when someone is being sarcastic, or content is ironic, for example. As a result, creating new categories of speech that online services are liable for hosting would almost certainly result in overbroad takedowns.

Right now, platforms are mostly allowed to create their own rules for how they moderate. Giving the government more power to control speech would not be a remedy for the moderation problems that exist. As an example, social media platforms have long struggled with the problem of extremist or violent content on their platforms. Because there is no international agreement on what exactly constitutes terrorist, or even violent and extremist, content, companies look at the United Nations’ list of designated terrorist organizations or the US State Department’s list of Foreign Terrorist Organizations. But those lists mainly consist of Islamist organizations, and are largely blind to, for example, U.S.-based right-wing extremist groups. And even if there were consensus on what constitutes a terrorist, the First Amendment generally would protect those individuals’ ability to speak, so long as they are not making true threats or directly inciting violent acts.

The combination of these lists and blunt content moderation systems leads to the deletion of vital information not available elsewhere, such as evidence of human rights violations or war crimes. It is very difficult for human reviewers, and impossible for algorithms, to consistently get the nuances of activism, counter-speech, and extremist content itself right. The result is that many instances of legitimate speech are falsely categorized as terrorist content and removed from social media platforms. Those false positives, and other moderation mistakes, will fall disproportionately on Muslim and Arab communities. These removals also hinder journalists, academics, and government officials, because they cannot view or share this content. While sometimes problematic, the documentation and discussion of terrorist acts is essential given that terrorism is one of the most important political and social issues in the world.

With further government intervention into what must be censored, this situation could potentially become much worse, putting marginalized communities and those with views that differ from whoever might be in power in an even more precarious situation online than they already are. Government actors often label political opposition or disempowered groups as terrorists. Section 230 ensures platforms make these choices based on their own calls about what constitutes speech they will not host, not the government’s whims.

We still need Section 230, now more than ever.

Now that a few companies have grown to contain a vast majority of user-generated online content, it’s essential that newer, smaller companies be given the same chance to host speech that those companies had fifteen or twenty years ago. Without Section 230, competition would be unlikely to succeed, because the liability for hosting online content would be so great that only the largest companies could survive the cost of (legitimate or illegitimate) lawsuits that they would have to fight. Additionally, though automated content moderation isn’t likely to succeed at scale, the companies that could afford it would be the only ones that could attempt to moderate. We absolutely still need Section 230. In fact, we may need Section 230 even more than we did in 1997.

Section 230 doesn’t just protect the big companies you’ve heard of—it protects all intermediaries equally. Removing that protection would open every intermediary up to lawsuits, forcing all but the largest of them to shut down, or stop hosting user-generated content altogether. And it would be much more difficult for new services that host speech to enter the online ecosystem.

Section 230 has already protected users in court.

The protections of Section 230 aren’t hypothetical. It’s been used to protect users and services in court many, many times.

A few examples: in 2006, women's health advocate Ilena Rosenthal posted a controversial opinion piece written by Tim Bolen onto a Usenet news group. Lawyers argued that Rosenthal was liable for libel, because posting the comments made her a "developer" of the information in question. The California Supreme Court upheld the strong protections of Section 230. Had the court found in favor of the plaintiffs, the implications for free speech online would have been far-reaching: bloggers could be held liable when they quote other people's writing and website owners could be held liable for what people say in message boards on their sites.

In 2007, a third party posted defamatory statements about a company, Universal Communication Systems, on an online Lycos message board. The company sued Lycos, arguing in part that Lycos' registration process and link structure had prompted the statements and extended a type of "culpable assistance" to the author. The court rejected those claims and ruled for Lycos, holding that its services were protected by Section 230.

And in 2003, a federal appellate court ruled that Section 230 protected the creator of a newsletter from legal claims by a third party whose email was included in the newsletter. The case recognized that Section 230 protected individual digital publishers from liability based on third-party content, a foundational principle that continues to protect individuals and online services today whenever they host or distribute other people’s content online.

In 2018, a spreadsheet known as the “Shitty Media Men List,” initially created by Moira Donegan, gained recognition for naming individuals who were suspected of mistreating female employees. The writer Stephen Elliott, who was named on the list, brought a defamation lawsuit against Donegan. But the Shitty Media Men List was a Google spreadsheet shared via link and made editable by anyone, making it particularly easy for anonymous speakers to share their experiences with men identified on the list. Because Donegan initially created the spreadsheet as a platform to allow others to provide information, Donegan is likely immune from suit under Section 230. The case is still pending, but we expect the court to rule that she is not liable.

There are dozens of cases like these. But many, many more have never had to go to court, thanks to Section 230’s protections, which are now largely settled law.

No, Section 230 doesn’t mean certain political views are censored more than others.

Some politicians seem to believe (or at least have claimed) that Section 230 results in censorship of certain political views, despite there being no evidence to support the claim. Others seem to believe (or at least have claimed) that Section 230 results in platforms hosting a variety of “dangerous” content. Though it may be easy to point the finger at the platforms, and by extension, at the law that protects those online services from liability for much of the content that users generate, Section 230 is not the problem. As described above, even without Section 230, online services have a First Amendment right to moderate user-generated content.

Reforming online platforms is tough work. Repealing Section 230 may seem like the easy way out, but as mentioned above, no reform to Section 230 that we’ve seen would solve these problems. Rather, reforms would likely backfire, increasing censorship in some cases and dangerously increasing liability in others.

There’s a lot of confusion around Section 230, but you can help.

The incredible thing about the Internet is that you’re not liable for what someone else wrote, even if you share it with others. Take a minute to exercise this right, and share this blog post, so that more people can get a clearer idea of why Section 230 matters, and how it helps the users of Internet services both big and small.

LOW POWER MODE: I sometimes put the blog in what I call LOW POWER MODE. If you see this note, the blog is operating like a sleeping computer, maintaining static memory, but making no new computations. If I am in low power mode, it's because I do not have time to do much that's inventive, original, or even substantive on the blog. This means I am posting straight shares, limited content posts, reprints, often something qualifying for the THAT ONE THING category and other easy to make posts to keep me daily. That's the deal. Thanks for reading.

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

- Bloggery committed by chris tower - 2012.30 - 10:10

- Days ago = 2007 days ago

- New note - On 1807.06, I ceased daily transmission of my Hey Mom feature after three years of daily conversations. I plan to continue Hey Mom posts at least twice per week but will continue to post the days since ("Days Ago") count on my blog each day. The blog entry numbering in the title has changed to reflect total Sense of Doubt posts since I began the blog on 0705.04, which include Hey Mom posts, Daily Bowie posts, and Sense of Doubt posts. Hey Mom posts will still be numbered sequentially. New Hey Mom posts will use the same format as all the other Hey Mom posts; all other posts will feature this format seen here.